Introduction

If you are a small or medium-sized business, chances are that you do not have the resources to build, operate, and maintain a dedicated private cloud and are using public clouds such as Amazon AWS, Google Cloud Platform, Microsoft Azure, or DigitalOcean instead.

Whether you are still planning the move or have already migrated your services and applications to the cloud, one fundamental consideration is your cloud security strategy.

In his book, author and Google software engineer Dylan Shields presents actionable strategies for security engineers who are tasked with building and securing cloud networks. Security incidents, such as denial-of-service attacks and brute-force SSH attacks, can be prevented if organizations conduct due diligence when it comes to network security. The best way to avoid these attacks, Shields said, is by creating a secure Virtual Private Cloud (VPC), together with isolated subnets and security groups.

Virtual Private Clouds offer the best of both cloud models: they function like a private cloud that runs on public or shared infrastructure. However, one caveat of virtual private clouds is that they are, by their very design, inaccessible from the public internet. This creates challenges not only for end-users (especially when working from home) who require access to their applications and services in the cloud, but also for IT administrators who need to carry out maintenance tasks and protect the VPC and the backend applications, services, and resources hosted in it from outside threats.

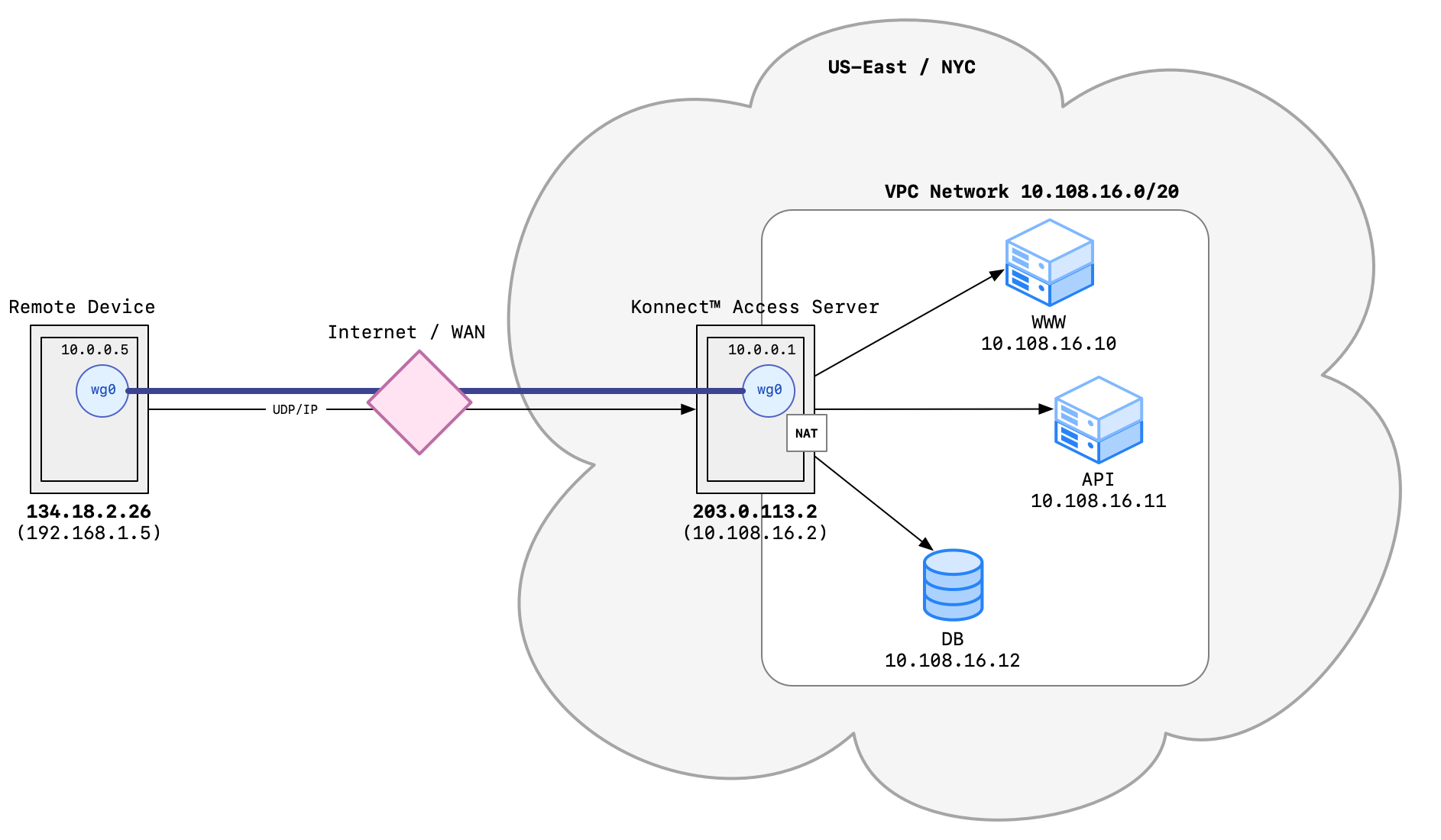

By using KUY.io Konnect™ as a Network Security Gateway (NSG), end-users and administrators can gain secure and convenient access to applications, services and resources deployed in your virtual private cloud.

In this article, we describe how to set up KUY.io Konnect™ access server as an NSG for a DigitalOcean virtual private cloud. Many of the concepts presented here also translate to other cloud environments such as AWS, GCP, or Azure.

VPC Networks

A virtual private cloud network is a virtual version of a physical network and runs on shared infrastructure just like a public cloud. VPC networks offer isolation for data and network traffic between the customers sharing resources in a public cloud. This layer of isolation is achieved through a private IP subnet or a virtual local area network (VLAN). A VPC network provides full control over the size of your network and the ability to deploy and scale resources at any time. Access to your resources is restricted unless you explicitly grant it.

Apart from the security aspect, VPC networks provide numerous other benefits:

- internal private connectivity for your virtual machine (VM) instances, including Kubernetes clusters, PaaS environments, and other cloud resources.

- native internal TCP/UDP load balancing and proxy systems for internal traffic.

- connectivity to on-premises networks using cloud tunnels and cloud interconnects.

- distribution of traffic from external load balancers to backend services.

Each of the big four cloud providers (AWS, GCP, Azure, DigitalOcean) offers VPC network capabilities, and your cloud projects can typically contain multiple VPC networks. Unless you create an organizational policy that prohibits it, new projects usually start with a default network (an auto mode VPC network) that has one subnetwork (subnet) in each region that your provider has a presence in.

The fantastic folks over at DigitalOcean have a great tutorial on setting up and securing your own private VPC network in their public cloud that we will follow closely as part of our build.

What We Will Build

When you create a new cloud project space, all resources deployed to the project space will be directly connected to the internet through a public network interface, commonly provisioned to the resources via a process called cloud-init.

KUY.io Konnect™ can take on the role of a Network Security Gateway (NSG) to protect your Virtual Private Cloud.

To make the project space more secure, we can convert it into a virtual private cloud: all resources by default connect to and communicate with each other through a private VPC network. We split connectivity between our Virtual Private Cloud and the public internet into separate resources: a network gateway that routes packets from the private VPC network to the internet, and KUY.io Konnect™ access server as an access gateway so that external devices can securely connect to the private VPC network.

By deploying KUY.io Konnect™ access server as your NSG, secure outside access is provided to VPN network clients - any user connected to the VPN has access to the backend resources as if they existed on the user's local network.

Setting Up a Project Space

To get started, we create a new cloud project space called vpc-demo and add the following cloud resources to the project:

- nyc-03-vpc-01: a virtual private network in the NYC-3 datacenter of the US East region, with an IP address range of 10.108.16.0/20. The address range is provided in CIDR notation.

- nyc-03-gw: a network gateway router used by backend resources to access the internet.

- nyc-03-backend-01: a backend resource.

- nyc-03-nsg: a KUY.io Konnect™ access server from the DigitalOcean marketplace to act as a Network Security Gateway.
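
To make the CIDR notation concrete, the following minimal sketch uses Python's standard ipaddress module to expand 10.108.16.0/20 into its netmask, broadcast address, and size. It is an illustration only and not part of the setup; the variable names are our own.

```python
import ipaddress

# The VPC address range from the project space, in CIDR notation.
vpc = ipaddress.ip_network("10.108.16.0/20")

netmask = str(vpc.netmask)                # dotted-quad form of the /20 prefix
first_address = str(vpc.network_address)  # lowest address in the range
broadcast = str(vpc.broadcast_address)    # highest address in the range
size = vpc.num_addresses                  # total number of addresses

print(f"netmask:   {netmask}")        # 255.255.240.0
print(f"network:   {first_address}")  # 10.108.16.0
print(f"broadcast: {broadcast}")      # 10.108.31.255
print(f"addresses: {size}")           # 4096
```

A /20 prefix fixes the first 20 bits of the address, leaving 12 bits (4096 addresses) for resources inside the VPC.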

Setting Up an Outbound Gateway

We first connect to the nyc-03-gw gateway through an SSH terminal. Because we created the gateway resource in a project space with a private VPC network, our cloud provider automatically provisioned multiple network interfaces for it, each connected to a different network.

Showing the Network Configuration

When we look at the configuration of the network interfaces, we can see that the gateway has two network interfaces, eth0 and eth1.

    ifconfig

    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 157.245.222.142 netmask 255.255.240.0 broadcast 157.245.223.255
            inet6 fe80::8ac:f8ff:fe80:ca9b prefixlen 64 scopeid 0x20<link>
            ether 0a:ac:f8:80:ca:9b txqueuelen 1000 (Ethernet)
            RX packets 2291 bytes 2671732 (2.6 MB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 610 bytes 73612 (73.6 KB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 10.108.16.2 netmask 255.255.240.0 broadcast 10.108.31.255
            inet6 fe80::c444:b3ff:fe76:adf9 prefixlen 64 scopeid 0x20<link>
            ether c6:44:b3:76:ad:f9 txqueuelen 1000 (Ethernet)
            RX packets 10 bytes 776 (776.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 12 bytes 936 (936.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

By looking up the routing configuration provisioned onto the machine via cloud-init, we can determine which interface serves as a private interface, and which interface is the public network interface:

    route -n

    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         157.245.208.1   0.0.0.0         UG    0      0        0 eth0
    10.17.0.0       0.0.0.0         255.255.0.0     U     0      0        0 eth0
    10.108.16.0     0.0.0.0         255.255.240.0   U     0      0        0 eth1
    157.245.208.0   0.0.0.0         255.255.240.0   U     0      0        0 eth0

We see that eth0 carries the default route (destination 0.0.0.0, which covers the internet) and is thus the public network interface, whereas eth1 routes to 10.108.16.0/20, our private VPC network, and is the private network interface.
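
The same distinction can be checked programmatically. The sketch below uses Python's standard ipaddress module to classify the two addresses reported by ifconfig on the gateway. The classify helper and its labels are hypothetical names chosen for illustration.

```python
import ipaddress

# Addresses reported by ifconfig on the gateway.
interfaces = {"eth0": "157.245.222.142", "eth1": "10.108.16.2"}

# The private VPC range of this project.
vpc = ipaddress.ip_network("10.108.16.0/20")


def classify(address: str) -> str:
    """Label an address as belonging to the VPC, another private range, or public."""
    ip = ipaddress.ip_address(address)
    if ip in vpc:
        return "private (VPC)"
    return "private (other)" if ip.is_private else "public"


roles = {name: classify(addr) for name, addr in interfaces.items()}

for name, role in roles.items():
    print(f"{name}: {role}")
```

This is only a cross-check of the addresses; the routing table remains the authoritative source for which interface carries which traffic.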

Configuring NAT Routing from VPC Network to the Internet

As a prerequisite for routing packets between network interfaces, we need to enable IP forwarding:

sysctl -w net.ipv4.ip_forward=1

Next, we install the iptables package from our distribution's package manager (in this case, Ubuntu with apt as the package manager):

sudo apt-get update

sudo apt-get install iptables

With the iptables package in place, we can add our traffic translation rules:

iptables -t nat -A POSTROUTING -s 10.108.16.0/20 -o eth0 -j MASQUERADE
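
To build intuition for what the MASQUERADE rule does, here is a deliberately simplified Python model of source NAT. It is not how the Linux kernel implements connection tracking: the MasqueradeTable class, the starting port 40000, and the example source address and port are assumptions made for illustration.

```python
import ipaddress
from dataclasses import dataclass, field

VPC = ipaddress.ip_network("10.108.16.0/20")  # corresponds to -s 10.108.16.0/20
PUBLIC_IP = "157.245.222.142"                 # address of eth0 on the gateway


@dataclass
class MasqueradeTable:
    """Toy model of source NAT (hypothetical, for illustration only)."""

    next_port: int = 40000                       # assumed first translated port
    by_port: dict = field(default_factory=dict)  # public port -> (private ip, port)

    def outbound(self, src_ip: str, src_port: int):
        """Rewrite the source of a packet leaving through the public interface."""
        if ipaddress.ip_address(src_ip) not in VPC:
            return src_ip, src_port              # rule does not match; unchanged
        public_port = self.next_port
        self.next_port += 1
        self.by_port[public_port] = (src_ip, src_port)
        return PUBLIC_IP, public_port

    def inbound(self, dst_port: int):
        """Map a reply arriving at the public address back to its origin, if known."""
        return self.by_port.get(dst_port)


nat = MasqueradeTable()
translated = nat.outbound("10.108.16.3", 51514)  # example VPC host and port
reply_target = nat.inbound(translated[1])

print(translated)    # source as seen from the internet
print(reply_target)  # where the reply is delivered inside the VPC
```

The point of the model is that hosts on the internet only ever see the gateway's public address, while the gateway remembers which private host each connection belongs to.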

The only thing left to do for the network gateway is to persist our network translation rules so they are applied when the gateway reboots:

sudo apt-get install iptables-persistent

During installation, we answer "Yes" when asked whether to save the current IPv4 rules.

Setting Up a Backend Resource

To log in to the backend resource nyc-03-backend-01, we will not SSH in directly. Instead, we connect through an SSH proxy tunnel via the gateway resource that we just configured.

Important: We need to use an SSH proxy connection so that we don't lose network connectivity when we disable the public network interface on the backend resource.

ssh -o ProxyCommand="ssh -W %h:%p root@{public_IP_of_gateway}" root@{private_IP_of_backend}

Network Configuration

We first need to determine which network interfaces in our backend resource act as the private and public interfaces.

First, let's determine which network interfaces exist:

    ifconfig

    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 167.71.246.166 netmask 255.255.240.0 broadcast 167.71.255.255
            inet6 fe80::b8c3:e8ff:fed6:779c prefixlen 64 scopeid 0x20<link>
            ether ba:c3:e8:d6:77:9c txqueuelen 1000 (Ethernet)
            RX packets 1023 bytes 2603071 (2.6 MB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 631 bytes 66023 (66.0 KB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 10.108.16.3 netmask 255.255.240.0 broadcast 10.108.31.255
            inet6 fe80::dc06:89ff:fe70:1169 prefixlen 64 scopeid 0x20<link>
            ether de:06:89:70:11:69 txqueuelen 1000 (Ethernet)
            RX packets 866 bytes 182403 (182.4 KB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 986 bytes 141809 (141.8 KB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Then we can use the route information to determine private and public interfaces.

    route -n

    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         167.71.240.1    0.0.0.0         UG    0      0        0 eth0
    10.17.0.0       0.0.0.0         255.255.0.0     U     0      0        0 eth0
    10.108.16.0     0.0.0.0         255.255.240.0   U     0      0        0 eth1
    167.71.240.0    0.0.0.0         255.255.240.0   U     0      0        0 eth0

Once again, eth0 is our public interface and eth1 our private interface. Also take note of the default route in the first row of the output: the default gateway to the cloud provider network is 167.71.240.1 via the eth0 interface. We will need this information in a moment to stay connected to the cloud provider's metadata service when we stop routing traffic through the public network interface.

Adding the Metadata Service Route

We create a new routing entry that tells our backend resource to connect to the metadata service at 169.254.169.254 through the cloud provider network gateway 167.71.240.1:

ip route add 169.254.169.254 via 167.71.240.1 dev eth0

Changing The Default Route To Our VPC Gateway

With the metadata service route in place, we can now change the default route so that the backend resource accesses the internet via the private network interface of our gateway at 10.108.16.2:

ip route change default via 10.108.16.2

Verifying Route Assignments

To make sure that everything is working as intended, we first print the new routing tables:

    route -n

    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         10.108.16.2     0.0.0.0         UG    0      0        0 eth1
    10.17.0.0       0.0.0.0         255.255.0.0     U     0      0        0 eth0
    10.108.16.0     0.0.0.0         255.255.240.0   U     0      0        0 eth1
    167.71.240.0    0.0.0.0         255.255.240.0   U     0      0        0 eth0
    169.254.169.254 167.71.240.1    255.255.255.255 UGH   0      0        0 eth0
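
The effect of these routes can be reasoned about with a small longest-prefix-match lookup. The Python sketch below encodes the five rows of the routing table printed above and returns the gateway and interface for a destination. It is a simplification (the kernel also considers metrics and policy rules), and the lookup helper is a hypothetical name.

```python
import ipaddress

# (destination network, gateway, interface) rows from the routing table.
# A gateway of None means the destination is directly reachable on that link.
ROUTES = [
    ("0.0.0.0/0", "10.108.16.2", "eth1"),
    ("10.17.0.0/16", None, "eth0"),
    ("10.108.16.0/20", None, "eth1"),
    ("167.71.240.0/20", None, "eth0"),
    ("169.254.169.254/32", "167.71.240.1", "eth0"),
]


def lookup(destination: str):
    """Return (gateway, interface) of the most specific route that matches."""
    ip = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(net), gateway, dev)
        for net, gateway, dev in ROUTES
        if ip in ipaddress.ip_network(net)
    ]
    # The default route matches everything, so matches is never empty.
    _, gateway, dev = max(matches, key=lambda row: row[0].prefixlen)
    return gateway, dev


internet = lookup("8.8.8.8")          # falls through to the default route
metadata = lookup("169.254.169.254")  # matched by the /32 host route
neighbour = lookup("10.108.16.2")     # directly reachable inside the VPC

print(internet, metadata, neighbour)
```

General internet traffic now leaves through eth1 towards our gateway, while only the metadata service is still reached through the provider gateway on eth0.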

Unfortunately, this routing configuration would be lost on restart. To persist the configuration, we need to modify the network configuration of the backend resource. For DigitalOcean droplets, we do this by editing /etc/netplan/50-cloud-init.yaml. If you are following along with this tutorial on a different cloud provider, please consult your provider's documentation to find the location of your network configuration files.

By default the network configuration looks like this:

    # This file is generated from information provided by the datasource. Changes
    # to it will not persist across an instance reboot. To disable cloud-init's
    # network configuration capabilities, write a file
    # /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg with the following:
    # network: {config: disabled}
    network:
        version: 2
        ethernets:
            eth0:
                addresses:
                - 167.71.246.166/20
                - 10.17.0.6/16
                gateway4: 167.71.240.1
                match:
                    macaddress: ba:c3:e8:d6:77:9c
                nameservers:
                    addresses:
                    - 67.207.67.2
                    - 67.207.67.3
                    search: []
                set-name: eth0
            eth1:
                addresses:
                - 10.108.16.3/20
                match:
                    macaddress: de:06:89:70:11:69
                nameservers:
                    addresses:
                    - 67.207.67.2
                    - 67.207.67.3
                    search: []
                set-name: eth1

To make our backend resource private to our VPC, we need to remove the gateway4 configuration line, and add a route to the eth1 interface:

    # This file is generated from information provided by the datasource. Changes
    # to it will not persist across an instance reboot. To disable cloud-init's
    # network configuration capabilities, write a file
    # /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg with the following:
    # network: {config: disabled}
    network:
        version: 2
        ethernets:
            eth0:
                addresses:
                - 167.71.246.166/20
                - 10.17.0.6/16
                match:
                    macaddress: ba:c3:e8:d6:77:9c
                nameservers:
                    addresses:
                    - 67.207.67.2
                    - 67.207.67.3
                    search: []
                set-name: eth0
            eth1:
                addresses:
                - 10.108.16.3/20
                match:
                    macaddress: de:06:89:70:11:69
                routes:
                - to: 0.0.0.0/0
                  via: 10.108.16.2
                nameservers:
                    addresses:
                    - 67.207.67.2
                    - 67.207.67.3
                    search: []
                set-name: eth1

After saving the changes, we can apply the new network configuration with netplan --debug apply. The --debug flag prints verbose output, which helps locate any formatting errors in the YAML file.

Verifying Connectivity

Our backend resource should still be able to connect to the internet. However, it will no longer use its eth0 public network interface to do so, but should connect through our network gateway's private interface instead.

    ping google.com

    PING google.com (142.250.65.174) 56(84) bytes of data.
    64 bytes from lga25s71-in-f14.1e100.net (142.250.65.174): icmp_seq=1 ttl=117 time=4.46 ms
    64 bytes from lga25s71-in-f14.1e100.net (142.250.65.174): icmp_seq=2 ttl=117 time=2.51 ms

We can validate that network packets are routed via our private interface eth1 through the gateway:

    ip route get 8.8.8.8

    8.8.8.8 via 10.108.16.2 dev eth1 src 10.108.16.3 uid 0
        cache

Deploying KUY.io Konnect™ access server as a Network Security Gateway

To get started with using KUY.io Konnect™ access server as your network security gateway, you can deploy a new KUY.io Konnect™ server instance from the DigitalOcean marketplace directly to your VPC network.

We then connect to the KUY.io Konnect™ instance via SSH to complete our configuration.

Network Interfaces

When you inspect the network interfaces of your KUY.io Konnect™ server, you can see a few interfaces that we have not seen on the other resources:

    ifconfig

    br-0d564d9999a7: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
            inet 172.18.0.1 netmask 255.255.0.0 broadcast 172.18.255.255
            ether 02:42:4e:40:cd:2a txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
            inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
            ether 02:42:ff:50:fc:ca txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 157.245.217.173 netmask 255.255.240.0 broadcast 157.245.223.255
            inet6 fe80::8024:d6ff:fe0f:500 prefixlen 64 scopeid 0x20<link>
            ether 82:24:d6:0f:05:00 txqueuelen 1000 (Ethernet)
            RX packets 5608 bytes 105609078 (105.6 MB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 3300 bytes 278002 (278.0 KB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 10.108.16.4 netmask 255.255.240.0 broadcast 10.108.31.255
            inet6 fe80::307c:ceff:fefc:80aa prefixlen 64 scopeid 0x20<link>
            ether 32:7c:ce:fc:80:aa txqueuelen 1000 (Ethernet)
            RX packets 9 bytes 706 (706.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 10 bytes 796 (796.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Here, br-0d564d9999a7 is the bridge network for the KUY.io Konnect™ server application stack, eth0 is our public interface, and eth1 is our private interface.

We can also see this reflected in the routes:

    route -n

    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         157.245.208.1   0.0.0.0         UG    0      0        0 eth0
    10.17.0.0       0.0.0.0         255.255.0.0     U     0      0        0 eth0
    10.108.16.0     0.0.0.0         255.255.240.0   U     0      0        0 eth1
    157.245.208.0   0.0.0.0         255.255.240.0   U     0      0        0 eth0
    172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
    172.18.0.0      0.0.0.0         255.255.0.0     U     0      0        0 br-0d564d9999a7

Starting KUY.io Konnect™ access server

To start KUY.io Konnect™ access server, please follow the steps described in the Quickstart Guide.

cd /opt/konnect

docker-compose up

During the initial configuration wizard, we can leave all settings as default: there are no special requirements to enable KUY.io Konnect™ access server as our NSG and connectivity works out of the box. When the configuration wizard is completed, set up a VPN device for your administrator user to verify everything is working as intended.

Securely connecting to your VPC cloud network

After configuring and starting your client device VPN connection, it should be listed in the VPN client as Active and we can validate network connectivity as follows.

First, we should be able to ping google.com from the VPN client device while connected to the VPN:

    ping google.com

    PING google.com (142.250.64.110): 56 data bytes
    64 bytes from 142.250.64.110: icmp_seq=0 ttl=116 time=22.522 ms
    64 bytes from 142.250.64.110: icmp_seq=1 ttl=116 time=21.614 ms

Next, we should see all traffic routed through a utun device (if you are on a Mac):

    route get 8.8.8.8

       route to: dns.google
    destination: default
           mask: default
      interface: utun6
          flags: <UP,DONE,CLONING,STATIC>
     recvpipe  sendpipe  ssthresh  rtt,msec    rttvar  hopcount      mtu     expire
           0         0         0         0         0         0      1420         0

We should then be able to ping our KUY.io Konnect™ server instance via its VPN IP 10.0.0.1, assuming you kept the default configuration during the configuration wizard.

    ping 10.0.0.1

    PING 10.0.0.1 (10.0.0.1): 56 data bytes
    64 bytes from 10.0.0.1: icmp_seq=0 ttl=64 time=20.378 ms
    64 bytes from 10.0.0.1: icmp_seq=1 ttl=64 time=22.049 ms

Lastly, we should be able to ping the private IP of our backend resource while connected to the VPN:

    ping 10.108.16.3

    PING 10.108.16.3 (10.108.16.3): 56 data bytes
    64 bytes from 10.108.16.3: icmp_seq=0 ttl=62 time=28.462 ms
    64 bytes from 10.108.16.3: icmp_seq=1 ttl=62 time=20.510 ms

Summary

When setting up and securing your Virtual Private Cloud projects, using KUY.io Konnect™ access server as a Network Security Gateway is a fantastic option to give end-users and administrators fast, easy, and secure access to your backend resources, services, and applications through a private VPN tunnel powered by the WireGuard® protocol. KUY.io Konnect™ server deploys in less than five minutes and provides out-of-the-box connectivity to both the internet and your VPC resources without requiring additional configuration.